Docker hero? Check yourself!

August 31, 2023

A story of one Dockerfile

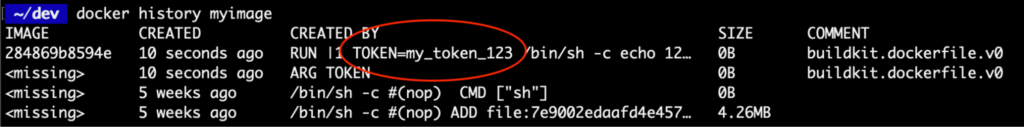

The story below follows the step-by-step evolution of a single Dockerfile. Along the way, you will face several real-life situations in which the Docker build process was not trivial. We will touch on performance, security, and workflow optimization.

As you read each use case, ask yourself: What would I do? What is the best practice here? Do I see any issues?

For each situation, I provide an answer that, in my experience, reflects the best practice for solving the challenge within the Dockerfile. You’re invited to compare it with your own solution!

Afterword

What should every James know?

- Don’t optimize what’s already working unless you clearly understand the benefit and the outcome.

- After applying changes, always test everything.

I hope you enjoyed reading and learned something new. And if you already knew everything, you’ve just confirmed that you are a true Docker Hero!